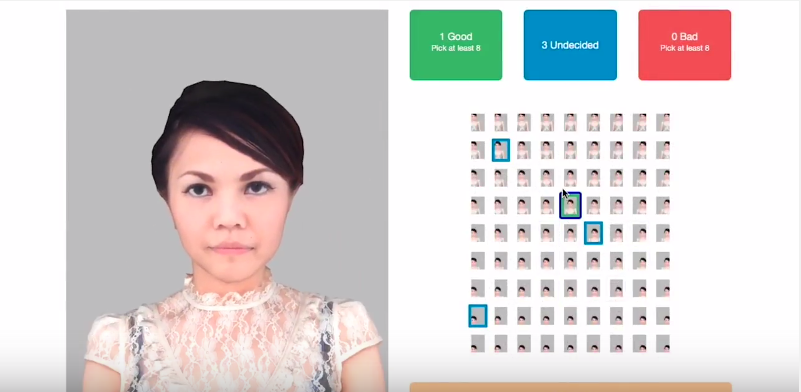

Credit: Prof. Daniel Vogel, University of Waterloo

Let’s be honest: at one time or another, we have all tried to take a selfie on our smartphones. Plenty of people seem to have mastered this not-so-vital skill, but a lot of the pictures don’t come out so well. So it becomes a question of moving around to find just the right light and snapping away until you get a photo you like. Well, brothers and sisters, you’re gonna really like what science has cooked up for you selfie addicts. A team of computer scientists at the University of Waterloo in Canada, led by Professor Dan Vogel, has developed a smartphone app that essentially senses where to position your phone to get the best picture possible. In this writer’s case, probably in the darkest exposure possible.

Not to be confused with other apps that pretty us up after the photo is taken, the U. of Waterloo team developed an algorithm that takes into account lighting direction, face position and face size to guide you toward the optimal selfie. The team took a very interesting, modern approach to building and testing their algorithm. They generated hundreds of ‘virtual selfies’, changing the lighting direction, face position and face size in each photo. Next, they hired an online crowdsourcing service so that thousands of people could vote on which of the hundreds of virtual selfies they thought was best. They then used the voting results to mathematically model and develop their algorithm for taking the best selfie. Prof. Vogel feels the team will be able to improve the app by taking new factors into account: “We can expand the variables to include aspects such as hairstyle, types of smile or even the outfit you wear.”
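For the curious, here is a rough sketch of how a vote-driven approach like this could work in principle. It is not the Waterloo team's actual model, which the article does not describe in detail. Everything in it is an assumption made for illustration: the `Setup` fields, the units, the tolerances, and the helper names `vote_shares`, `best_setup` and `guidance` are all hypothetical. The sketch simply tallies crowd votes per virtual-selfie setup, picks the most popular one, and turns the gap between your current framing and that target into hints.

```python
from collections import Counter
from typing import NamedTuple


class Setup(NamedTuple):
    """One hypothetical virtual-selfie configuration (fields and units are assumed)."""
    light_deg: int      # lighting direction, in degrees
    face_offset: float  # horizontal face position, as a fraction of frame width
    face_size: float    # face height, as a fraction of frame height


def vote_shares(votes):
    """Return the fraction of all votes that each setup received."""
    counts = Counter(votes)
    total = sum(counts.values())
    return {setup: n / total for setup, n in counts.items()}


def best_setup(votes):
    """Pick the setup with the largest share of crowd votes."""
    shares = vote_shares(votes)
    return max(shares, key=shares.get)


def guidance(current, target, size_tol=0.05, light_tol=10):
    """Turn the gap between the current framing and the target into simple hints."""
    hints = []
    if current.face_size < target.face_size - size_tol:
        hints.append("move the phone closer")
    elif current.face_size > target.face_size + size_tol:
        hints.append("move the phone farther away")
    if abs(current.light_deg - target.light_deg) > light_tol:
        hints.append("turn toward the light")
    return hints


# Made-up votes: each entry is the setup one crowd worker preferred.
a = Setup(45, 0.0, 0.4)
b = Setup(90, 0.1, 0.6)
c = Setup(0, 0.0, 0.3)
votes = [a, a, b, a, c, b]

target = best_setup(votes)
print(target, guidance(c, target))
```

A plain tally like this only ranks the setups people actually saw. Fitting a mathematical model to the votes, as the team reports doing, would also let an app score framings that fall in between the tested ones.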

Transcript of Video Below: