This latest research led by CMU’s Marcel Just builds on the pioneering use of machine learning algorithms with brain imaging technology to “mind read.” The findings indicate that the mind’s building blocks for constructing complex thoughts are formed by the brain’s various sub-systems and are not word-based.

Scientists at MIT’s Computer Science and Artificial Intelligence Lab have already developed an application that lets a person guide a robot with their brainwaves. For now, though, the MIT technology is limited to simple binary tasks, such as guiding a robot as it decides between two choices. With complex human-robot interaction as the next major frontier for robotics research, Carnegie Mellon University’s latest research, led by Marcel Just, builds on the pioneering use of machine learning algorithms with brain imaging technology to “mind read.” The study offers new evidence that the neural dimensions of concept representation are shared across cultures and languages.

Details of the Study

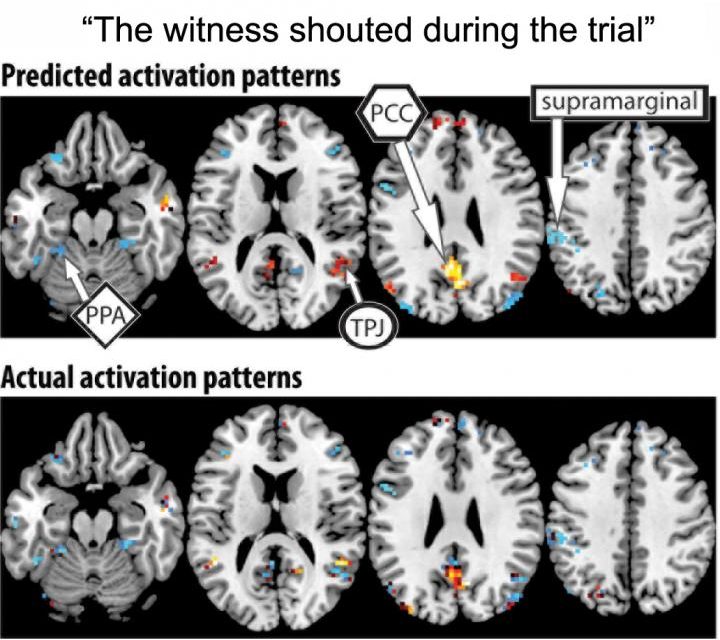

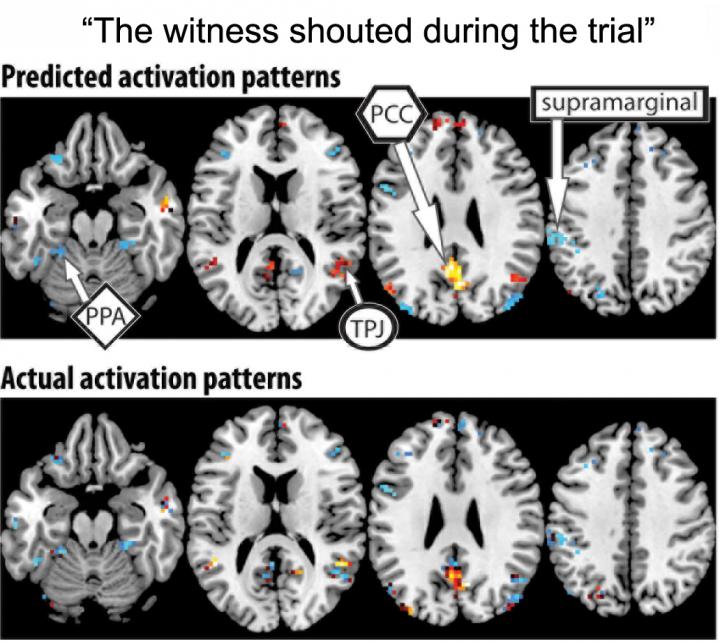

The paper, published in Human Brain Mapping and funded by the Intelligence Advanced Research Projects Activity (IARPA), details how the mind’s building blocks for constructing complex thoughts are formed by the brain’s various sub-systems and are not word-based. The study used a computational model to assess the brain activation patterns evoked by 239 sentences in seven adult participants. The model learned how each participant’s activation patterns for these sentences corresponded to the neurally plausible semantic features that characterized each sentence. It was then tested on a 240th, left-out sentence, a procedure known as cross-validation.

The model was able to predict the semantic features of the left-out sentence from its brain activation pattern with 87 percent accuracy, despite never having been exposed to that activation before. It also worked in the opposite direction, predicting the activation pattern of a previously unseen sentence from its semantic features alone.
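For readers curious about what leave-one-out cross-validation looks like in practice, here is a minimal sketch in Python. It is not the study's model: the data are synthetic, the ridge regression mapping is an assumed stand-in, and the names (`fit_ridge`, `leave_one_out_identification`) and sizes (40 sentences, 6 features, 50 voxels) are hypothetical. The accuracy it reports is a simple best-match score, not the measure behind the 87 percent figure.

```python
import numpy as np


def fit_ridge(X, Y, lam=1.0):
    """Closed-form ridge regression: W = (X^T X + lam * I)^-1 X^T Y."""
    n_inputs = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_inputs), X.T @ Y)


def cosine_similarity(vec, candidates):
    """Cosine similarity between one vector and each row of `candidates`."""
    norms = np.linalg.norm(candidates, axis=1) * np.linalg.norm(vec)
    return (candidates @ vec) / norms


def leave_one_out_identification(X, Y, lam=1.0):
    """Hold out each row in turn, fit X -> Y on the remaining rows, and
    check whether the prediction for the held-out row is closest to its
    true target among all candidate targets."""
    n_items = X.shape[0]
    hits = 0
    for held_out in range(n_items):
        train = np.arange(n_items) != held_out  # mask excluding one item
        W = fit_ridge(X[train], Y[train], lam)
        prediction = X[held_out] @ W
        best_match = np.argmax(cosine_similarity(prediction, Y))
        hits += int(best_match == held_out)
    return hits / n_items


# Synthetic stand-in data (assumption): activations are a noisy linear
# mix of each sentence's semantic features.
rng = np.random.default_rng(0)
n_sentences, n_features, n_voxels = 40, 6, 50
features = rng.normal(size=(n_sentences, n_features))
mixing = rng.normal(size=(n_features, n_voxels))
noise = 0.1 * rng.normal(size=(n_sentences, n_voxels))
activations = features @ mixing + noise

# Both directions described in the article, on toy data.
encode_acc = leave_one_out_identification(features, activations)
decode_acc = leave_one_out_identification(activations, features)
print(f"features -> activation identification: {encode_acc:.2f}")
print(f"activation -> features identification: {decode_acc:.2f}")
```

The key point is that the held-out sentence never contributes to the fitted weights, so a correct match reflects generalization and not memorization.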

Conclusions

The new study demonstrates that the brain’s coding of 240 complex events uses an alphabet of 42 meaning components. These neurally plausible semantic features include person, setting, size, social interaction and physical action. Each type of information is processed in a different brain system, which is also how the brain processes information about objects. Marcel Just commented, “A next step might be to decode the general type of topic a person is thinking about, such as geology or skateboarding. We are on the way to making a map of all the types of knowledge in the brain.”